Mistral 7B, a 7.3 billion-parameter language model, is making waves not because of its scale but because it outperforms larger models such as Meta’s Llama 2 13B. In this article, we’ll take a closer look at Mistral 7B: its capabilities, benchmark results, and potential applications.

A rising startup

Mistral AI, a Paris-based startup founded by alumni of Google DeepMind and Meta, rose to prominence earlier this year with a distinctive WordArt logo and $118 million in seed funding. The round, reported as the largest seed round in European history, thrust Mistral AI into the spotlight.

The company’s mission is clear: “Make AI useful for business” by leveraging public data and customer contributions. With the launch of Mistral 7B, the company is taking an important first step toward achieving this mission.

Mistral 7B could be a game changer

Mistral 7B is no ordinary language model. Despite having only 7.3 billion parameters, it outperforms larger models such as Meta’s Llama 2 13B on benchmarks. It performs well on English language tasks while also showing strong coding capabilities, a combination that opens the door to a variety of enterprise-focused applications.

One noteworthy aspect of Mistral 7B is that it is open source, released under the Apache 2.0 license. This means anyone can fine-tune and use the model, on-premises or in the cloud, including in enterprise scenarios, subject only to the license’s attribution and notice terms.

Apache 2.0 License

The Apache 2.0 License is a permissive open source license. Among other things, it grants end users of the software a license to any patents covered by that software, which makes Apache-licensed software easier to adopt with confidence.

How to use Mistral 7B

Under the Apache 2.0 license, Mistral 7B can be downloaded, fine-tuned, and deployed either on-premises or in the cloud.

Benchmarks speak louder than words

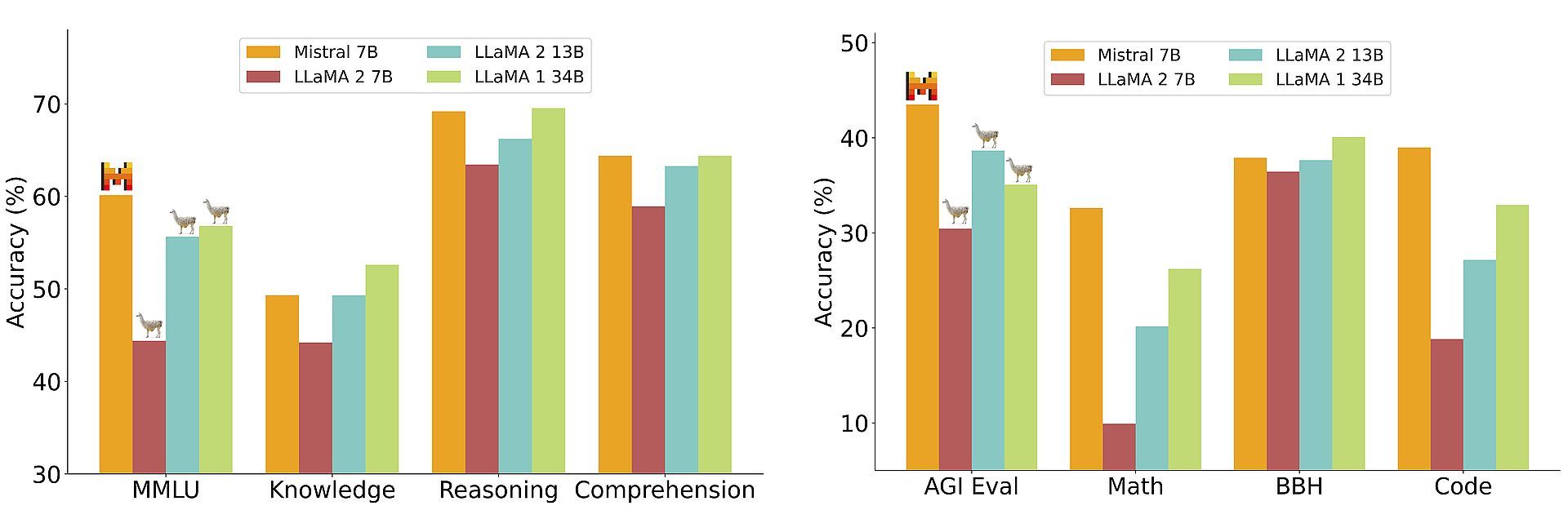

Even though Mistral 7B has only just arrived, it has already proven itself in benchmark tests. In head-to-head comparisons with open source competitors, the model consistently performs well, outperforming both Llama 2 7B and Llama 2 13B across a variety of tasks.

Two architectural choices contribute to these results: Grouped Query Attention (GQA), which speeds up inference, and Sliding Window Attention (SWA), which lets the model process longer sequences at lower computational cost.
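To make the two attention techniques concrete, here is a minimal Python sketch. It is not Mistral's implementation: the function names, the tiny sequence length, the window size, and the head counts are all illustrative assumptions. The first function builds a causal mask in which each position sees only the most recent `window` positions (itself included), and the second shows how several query heads can share one key/value head.

```python
def sliding_window_causal_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    Assumption for this sketch: `window` counts the current position too,
    so position i sees positions i - window + 1 .. i and nothing after i.
    """
    return [
        [0 <= i - j < window for j in range(seq_len)]
        for i in range(seq_len)
    ]


def kv_head_for_query_head(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """Grouped-query attention: consecutive query heads share one key/value head."""
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be a multiple of n_kv_heads")
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


# Illustrative values only: 6 tokens, a window of 3, 8 query heads, 2 KV heads.
for row in sliding_window_causal_mask(seq_len=6, window=3):
    print("".join("x" if allowed else "." for allowed in row))

print([kv_head_for_query_head(h, n_q_heads=8, n_kv_heads=2) for h in range(8)])
```

Because each row of the mask has at most `window` allowed positions, the attention work per token stops growing once the sequence is longer than the window. Sharing key/value heads means fewer keys and values have to be stored and read during generation.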

Unleash cost-effective efficiency

An interesting aspect of Mistral 7B’s performance is its cost-effectiveness. One way to measure this is the “equivalent model size”: the size a Llama 2 model would need to be to match Mistral 7B’s score, which translates directly into memory savings and throughput gains. On reasoning, comprehension, and STEM benchmarks, Mistral 7B performs on par with a Llama 2 model three times its size.

This makes it an attractive choice for resource-efficient applications.
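As a rough illustration of what that size difference means for memory, the sketch below estimates the space needed just to hold the weights. It assumes 16-bit weights (2 bytes per parameter) and ignores activations and the attention cache, so the numbers are a back-of-the-envelope estimate rather than a measurement. The function name is hypothetical.

```python
def weight_memory_gb(n_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes).

    Assumption: 2 bytes per parameter (16-bit weights); no activations or cache.
    """
    return n_params * bytes_per_param / 1e9


mistral_params = 7.3e9                   # parameter count quoted in this article
equivalent_params = 3 * mistral_params   # "three times its size"

print(f"Mistral 7B weights:        ~{weight_memory_gb(mistral_params):.1f} GB")
print(f"3x-size equivalent model:  ~{weight_memory_gb(equivalent_params):.1f} GB")
```

Under these assumptions the smaller model needs roughly a third of the weight memory for comparable benchmark results, which is the saving the equivalent-size comparison is pointing at.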

Looking to the future

To demonstrate the adaptability of Mistral 7B, the model was fine-tuned on instruction datasets publicly available on Hugging Face. The resulting model, called Mistral 7B Instruct, outperforms other 7B models on MT-Bench and is comparable to 13B chat models. This result shows how well the base model generalizes and hints at its potential in a variety of professional applications.

Mistral AI looks forward to working with the community to build guardrails that ensure responsible and moderated output. This commitment aligns with broader industry trends in ethical AI.

In summary, Mistral 7B represents a significant step forward for language models. With its compact size, open source license, and strong benchmark performance, it promises to change the way enterprises use artificial intelligence across a wide range of applications. As Mistral AI continues to innovate, we can expect further progress in the field.